Data center tweaks could unlock 76 GW of new power capacity in the US

Tech companies, data center developers, and power utilities have been panicking over the prospect of runaway demand for electricity in the U.S. in the face of unprecedented growth in AI. Amidst all the hand wringing, a new paper published this week suggests the situation might not be so dire if data center operators and other heavy electricity users curtail

How Rocket Companies modernized their data science solution on AWS

This post was written with Dian Xu and Joel Hawkins of Rocket Companies. Rocket Companies is a Detroit-based FinTech company with a mission to

AWS and DXC collaborate to deliver customizable, near real-time voice-to-voice translation capabilities for Amazon Connect

Providing effective multilingual customer support in global businesses presents significant operational challenges. Through collaboration between AWS and DXC Technology, we’ve developed a scalable voice-to-voice

Dream, Truth, & Good — AI Alignment Forum

One way in which I think current AI models are sloppy is that LLMs are trained in a way that messily merges the following "layers": The "dream machine" layer: LLMs are pre-trained on lots of slop from the internet, which creates an excellent "prior". The "truth machine": LLMs are trained

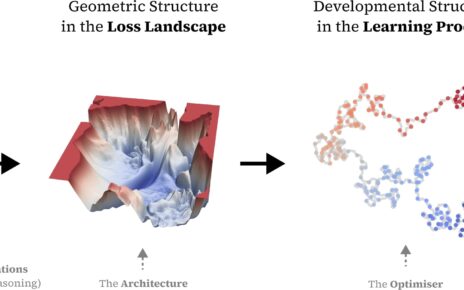

Timaeus in 2024 — AI Alignment Forum

TLDR: We made substantial progress in 2024: We published a series of papers that verify key predictions of Singular Learning Theory (SLT) [1, 2, 3, 4, 5, 6]. We scaled key SLT-derived techniques to models with billions of parameters, eliminating our main concerns around tractability. We have clarified our theory of change

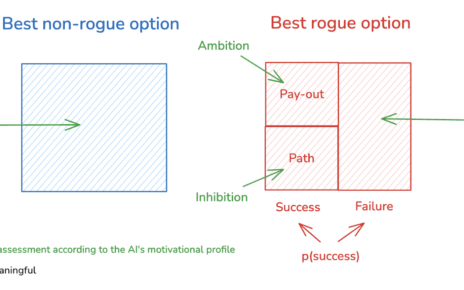

When should we worry about AI power-seeking? — AI Alignment Forum

(Audio version here (read by the author), or search for "Joe Carlsmith Audio" on your podcast app.) This is the second essay in a series that I’m calling “How do we solve the alignment problem?”.[1] I’m hoping that the individual essays can be read fairly well on their own, but see

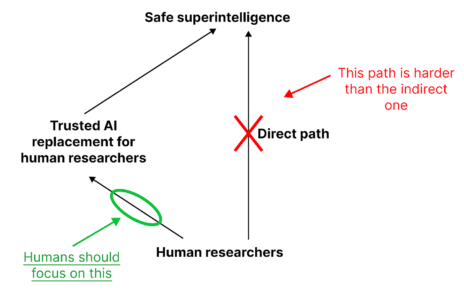

How might we safely pass the buck to AI? — AI Alignment Forum

My goal as an AI safety researcher is to put myself out of a job. I don’t worry too much about how planet-sized brains will shape galaxies in 100 years. That’s something for AI systems to figure out. Instead, I worry about safely replacing human researchers with AI agents, at

Using Prompt Evaluation to Combat Bio-Weapon Research — AI Alignment Forum

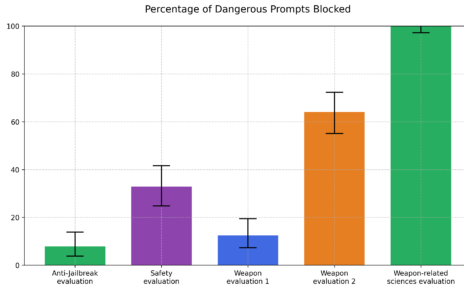

With many thanks to Sasha Frangulov for comments and editing. Before publishing their o1-preview model system card on Sep 12, 2024, OpenAI tested the model on various safety benchmarks which they had constructed. These included benchmarks which aimed to evaluate whether the model could help with the development of Chemical,

Generative AI Summit Austin, 2025

Catch up on every session from the Generative AI Summit Austin with sessions from the likes of DLA Piper, Wayfair, Vellum and many more.

Types, applications, and future trends

This technology is being used to identify fake photos. An AI-powered tool called Photoshop Detector can recognize a variety of objects, patterns, pictures, and more. The system learns to do this from a large amount of data, objects, or photos. In this manner, the system will use

Automation and intelligent assistance (2025 guide)

Imagine having a personal assistant who can not only schedule your appointments and send emails but also proactively anticipate your needs, learn your preferences, and complete complex tasks on your behalf. That’s the promise of AI agents — intelligent software entities designed to operate autonomously and achieve specific